The above diagram gives a high-level overview of my approach to estimating the user's pose within an urban environment. So far I have been focusing on the computer vision side of things, trying to get a robust pose estimate from keypoint correspondences between what's seen through the camera's lens and a rectified image that serves as a natural marker. Getting this to work well on a mobile device has been quite a project in itself, and there are still plenty of challenges to solve there. But for the past couple of weeks, I have been diving into the other sensors found on an iPad, iPhone, many of the top-of-the-line Android phones and tablets, and likely most portable media devices of the future: GPS, compass, gyroscopes, and accelerometers. These sensors can be combined to give a pose estimate as well (and this is extremely easy on a platform like iOS... the CoreMotion framework abstracts away a lot of the details, and I believe there is some rugged sensor fusion going on at the hardware level).

Most of the existing "locative" augmented reality apps out there (like Layar, Wikitude, or Yelp Monocle) use only these sensors. This is problematic mainly because GPS does not give very precise or accurate position information, especially in an urban environment. GPS drifts, can be offset by several meters due to multipath effects, and generally doesn't get you "close enough" to do a true pixel-perfect visual overlay onto the real world, so most apps that use sensor-based AR simply display floating information clouds and textual annotations rather than 3D graphics. Thus, I aim to combine vision- and sensor-based pose estimates for better results.

This video gives a nice overview of what the different sensors do and what they're each good and bad at.

Wednesday, April 20, 2011

Friday, April 8, 2011

Some sort of results

Outdoors, recognizing a building facade, on an iPad, at a reasonable framerate, just like I always wanted. In the frame I screen-captured, things aren't registering properly just yet, but oh well. It was light outside then, but we all know the best work gets done after sunset.

Tuesday, April 5, 2011

From Homography to OpenGL Modelview Matrix

This is the challenge of the week: how do I get from a 3x3 homography matrix (which relates the plane of the source image to the plane found in the scene image) to an OpenGL modelview transformation matrix so I can start, you know, augmenting reality? The tricky thing is that while I can use the homography to project a point on the source plane onto the 2D image plane, I need a separate rotation and translation to feed OpenGL so it can set the location and orientation of the camera in the scene.


The easy answer seemed to be using OpenCV's cv::solvePnP() (or its C equivalent, cvFindExtrinsicCameraParams2()) by inputting the four corners of the detected object calculated from the homography. But I'm getting weird memory errors with this function for some reason ("incorrect checksum for freed object - object was probably modified after being freed. *** set a breakpoint in malloc_error_break to debug", but setting a breakpoint on malloc_error_break didn't really help, and it isn't an Objective-C object giving me trouble, so NSZombieEnabled won't be any help, etc etc arghhh....) AND it looks like it's possible to decompose a homography matrix into rotation and translation vectors, which is all I really need (as long as I have the camera intrinsic matrix, which I found in the last post). solvePnP looks useful if I wanted to do pose estimation from a 3D structure, but I'm sticking to planes for now as a first step. OpenCV's solvePnP() also doesn't look like it has an option to use RANSAC, which seems important if many points are likely to be outliers, an assumption that the Ferns-based matcher relies upon.

Now to figure out the homography decomposition... There are some equations here and some code here. I wish this were built into OpenCV. I will update as I find out more.

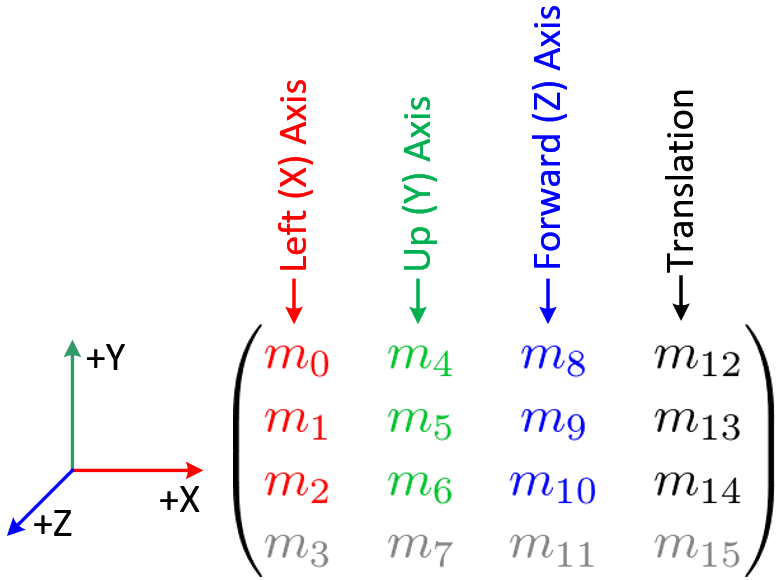

Update 1: The code found here was helpful. I translated it to C++ and used the OpenCV matrix libraries, so it required a little more work than a copy-and-paste. The 3x3 rotation matrix it produces is made up of the three orthogonal vectors that OpenGL wants (so they imply a rotation, but they're not three Euler angles or anything) which this image shows nicely:

Breakdown of the OpenGL modelview matrix (via)

The translation vector seems to be translating correctly as I move the camera around, but I'm not sure how it's scaled. Values seem to be in the +/- 1.0 range, so maybe they are in screen widths? Certainly they aren't pixels. Maybe if I actually understood what was going on I'd know better... Well, time to set up OpenGL ES rendering and try this out.
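To make the decomposition step concrete, here is a minimal sketch of the textbook planar decomposition, assuming H maps reference-plane coordinates (X, Y, 1) to pixels and is therefore proportional to K [r1 r2 t]. It is not the translated code mentioned in Update 1, and the function name `decompose_homography` is made up for illustration. One consequence of this model is that the translation comes out in whatever units the reference-plane coordinates use, which would explain values in the +/- 1.0 range if the plane is defined on a normalized square.

```python
import math

def decompose_homography(H, fx, fy, cx, cy):
    """Recover (R, t) from a plane-to-image homography H (3x3, list of rows).

    Assumes H is proportional to K * [r1 r2 t], with K built from fx, fy, cx, cy.
    """
    # Columns of K^-1 * H. K is upper triangular, so its inverse is applied in closed form.
    cols = []
    for j in range(3):
        a, b, c = H[0][j], H[1][j], H[2][j]
        cols.append([(a - cx * c) / fx, (b - cy * c) / fy, c])
    h1, h2, h3 = cols

    def norm(v):
        return math.sqrt(sum(x * x for x in v))

    # r1 and r2 must be unit vectors; average the two column norms to fix the scale.
    scale = 2.0 / (norm(h1) + norm(h2))
    # H is only defined up to sign; pick the sign that puts the plane in front of the camera.
    if h3[2] * scale < 0:
        scale = -scale

    r1 = [x * scale for x in h1]
    r2 = [x * scale for x in h2]
    t = [x * scale for x in h3]
    # The third rotation axis is the cross product of the first two.
    r3 = [r1[1] * r2[2] - r1[2] * r2[1],
          r1[2] * r2[0] - r1[0] * r2[2],
          r1[0] * r2[1] - r1[1] * r2[0]]
    # Assemble R with r1, r2, r3 as its columns.
    R = [[r1[i], r2[i], r3[i]] for i in range(3)]
    return R, t
```

With a noisy homography, r1 and r2 will not be exactly orthogonal, so a real implementation would probably re-orthogonalize R afterwards; that step is left out here.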

Update 2: Forgot for a minute that OpenGL's fixed pipeline requires two transformation matrices: a modelview matrix (which I figured out above, based on the camera's EXtrinsic properties) and a projection matrix (which is based on the camera's INtrinsic properties). These resources might be helpful in getting the projection matrix.
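As a rough illustration of the intrinsics-to-projection step, here is a sketch of one common construction. The assumptions are mine: pixel origin at the top-left with y pointing down, an OpenGL camera looking down the negative z-axis, an image size passed in by the caller (640x480 would match the ideal center of 320, 240), and arbitrary near and far planes. The function names are hypothetical.

```python
def projection_from_intrinsics(fx, fy, cx, cy, width, height, near, far):
    """Build a 4x4 OpenGL-style projection matrix (list of rows) from pinhole intrinsics."""
    return [
        # Focal length scaled to NDC, plus an offset for an off-center principal point.
        [2.0 * fx / width, 0.0, 1.0 - 2.0 * cx / width, 0.0],
        # Same for y; the sign of the offset accounts for image y pointing down.
        [0.0, 2.0 * fy / height, 2.0 * cy / height - 1.0, 0.0],
        # Standard OpenGL depth mapping of [near, far] to [-1, 1].
        [0.0, 0.0, -(far + near) / (far - near), -2.0 * far * near / (far - near)],
        # Perspective divide by -z.
        [0.0, 0.0, -1.0, 0.0],
    ]

def to_column_major(m):
    """Flatten a 4x4 list of rows into the 16-element column-major order OpenGL loads."""
    return [m[r][c] for c in range(4) for r in range(4)]
```

When the principal point sits exactly at the image center, the two offset terms vanish and this reduces to a symmetric frustum. Other sign conventions exist, so the offsets may need flipping depending on how the image is drawn.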

Update 3: Ok, got it figured out. It's not pretty, but it works. I think I came across the same thing as this guy. Basically I needed to switch the sign on four out of nine elements of the modelview rotation matrix and two of the three components of the translation vector. The magnitudes were correct, but it was rotating backwards about the z-axis and translating backwards along the x- and y-axes. This was extremely frustrating. So, I hope the code after the jump helps someone else out...
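For readers without that listing, here is a minimal sketch of one widely used convention for this kind of sign fix. It is not necessarily the same sign pattern described in Update 3. It assumes the pose (R, t) is expressed in a vision-style camera frame (x right, y down, z forward) and converts it to OpenGL's camera frame (x right, y up, z toward the viewer) by negating the y and z rows. The function name is hypothetical.

```python
def modelview_from_pose(R, t):
    """Convert a vision-style pose (3x3 R as rows, 3-vector t) to a column-major modelview."""
    # Negate the y and z rows: vision cameras look down +z with y down,
    # while OpenGL cameras look down -z with y up.
    flip = [1.0, -1.0, -1.0]
    m = [[flip[i] * R[i][0], flip[i] * R[i][1], flip[i] * R[i][2], flip[i] * t[i]]
         for i in range(3)]
    m.append([0.0, 0.0, 0.0, 1.0])
    # OpenGL expects the 16 values in column-major order.
    return [m[r][c] for c in range(4) for r in range(4)]
```

Which elements end up changing sign depends on how the reference plane's own axes are set up, so a different pattern (like the four-of-nine flip above) can be equally valid for a different choice of model coordinates.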

Monday, April 4, 2011

Offline camera calibration for iPhone/iPad-- or any camera, really

Creating a GUI to perform camera calibration on a mobile device like an iPhone or iPad sounded like more work than it would be worth, so I wrote a short program to do it offline. The cameras used on these devices can be assumed to be consistent within the same model, so it makes more sense for an app developer to have several precomputed calibration matrices available rather than asking the user to do this step on their own device.


The program I wrote is adapted from a tutorial I found to do camera calibration from live input. My version instead looks for a sequence of files named 00.jpg, 01.jpg, etc. and calibrates from those. So the way I used it was to take several pictures of the checkerboard pattern with my iPad, upload them to my computer, edit out the rest of the stuff on my desktop in Photoshop so the corner detection was more likely to be correct, and rename them. The output of the program is two XML files which include the camera intrinsic parameters and distortion coefficients. The code for the program is attached after the jump.

And results:

f_x = 786.42938232

f_y = 786.42938232

c_x = 311.25384521 // See update below

c_y = 217.01358032 // See update below

And distortion coefficients were: -0.10786291, 1.23078966, -4.54779295e-03, -3.28966696e-03, -5.54199600

I hope I did that right. The center is slightly off from where it ideally should be (320, 240).
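To show how these numbers are used, here is a small sketch of the pinhole-plus-distortion model as OpenCV documents it, assuming the five coefficients are in OpenCV's usual order (k1, k2, p1, p2, k3). The function name is hypothetical, and this is not the calibration program itself.

```python
def project_with_distortion(x, y, fx, fy, cx, cy, dist):
    """Map a normalized camera-frame point (x = X/Z, y = Y/Z) to pixel coordinates."""
    k1, k2, p1, p2, k3 = dist  # assumed order: radial k1, k2, tangential p1, p2, radial k3
    r2 = x * x + y * y
    # Radial distortion grows with distance from the optical axis.
    radial = 1.0 + k1 * r2 + k2 * r2 ** 2 + k3 * r2 ** 3
    # Tangential distortion models a lens that is not parallel to the sensor.
    xd = x * radial + 2.0 * p1 * x * y + p2 * (r2 + 2.0 * x * x)
    yd = y * radial + p1 * (r2 + 2.0 * y * y) + 2.0 * p2 * x * y
    # Apply the intrinsic matrix: scale by focal length and shift by the principal point.
    return fx * xd + cx, fy * yd + cy
```

A point on the optical axis (x = 0, y = 0) lands exactly on (c_x, c_y) regardless of the distortion coefficients, which is why an error in the principal point shifts every projection.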

Note: I found this precompiled private framework of OpenCV built for OS X rather handy. It is only built with 32-bit support, so set your target in Xcode accordingly.

UPDATE: The principal point obtained from this calibration was wrong! It was throwing off the pose estimates at glancing angles. I set it to (320, 240) and everything works better now...

The Approach So Far

This project had been under development for a few months before I started this blog, so I might as well explain the approach as it stands so far.

There are three "big picture" technical components to this project. The first is developing an efficient markerless camera-based AR system consisting of a keypoint detector, feature matcher and pose estimator on the mobile platform. The second is sensor fusion with the other sensors available on a modern mobile device: compass, GPS, gyroscope, and accelerometer. The third is user interface design that integrates these technologies into an easy-to-use app that can both view augmented data and contribute new user-generated data to grow the database.

So far, I have focused on building the general-purpose markerless AR system. I am using FAST Corner Detection to find keypoints in an image, followed by the Ferns classifier to match the keypoints as seen through the camera with those in a reference image, and then using RANSAC to calculate the homography mapping the reference image to the camera image. All the algorithms at this point are built into OpenCV, which I have compiled for iOS with some outside help.
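As a small illustration of the last stage of that pipeline, here is a sketch of how a 3x3 homography can be used once it has been estimated: mapping the four corners of the reference image into the camera frame, which gives the outline to draw over the scene. Detection, matching and RANSAC are not shown, and the function names are made up for this example.

```python
def apply_homography(H, x, y):
    """Map a reference-image point (x, y) through H (3x3, list of rows) into the camera image."""
    # Homogeneous multiply followed by the perspective divide.
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    u = (H[0][0] * x + H[0][1] * y + H[0][2]) / w
    v = (H[1][0] * x + H[1][1] * y + H[1][2]) / w
    return u, v

def project_corners(H, width, height):
    """Return the camera-image positions of the reference image's four corners."""
    corners = [(0.0, 0.0), (width, 0.0), (width, height), (0.0, height)]
    return [apply_homography(H, x, y) for x, y in corners]
```

Connecting the four returned points in order gives the quadrilateral where the reference image was found in the current frame.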

(Speaking of iOS, I have tested this code on both an iPhone 4 and an iPad 2. The iPad 2 is significantly faster even without specific multithreaded programming techniques to take advantage of the dual-core processor. I'm not yet sure exactly why this is, but maybe discovering why would reveal some unexpected bottlenecks in my code...)

The immediate next step is to convert the homography I find into an OpenGL modelview transformation matrix so I can start rendering something more interesting than a rectangle over my scene. Though rectangles are nice and satisfying after fighting compilation errors for days, and seeing new rectangles drawn at ~12fps is great after a few weeks trying out SURF descriptors. And even though I have something that remotely functions, there is plenty of room for optimization, especially in terms of compressing the Ferns so they don't hog so much memory. The ideas in this paper look like they might be half-implemented in the OpenCV Ferns code, though they are commented out.

More details as things progress...
